Conference Exhibitor Data Retrieval: A Selenium & BeautifulSoup Solution in Python

In this project, we use the Selenium and BeautifulSoup Python libraries to automate navigating a website with specific search criteria and to extract detailed data from multiple pages of results. The objective was to obtain exhibitor contact details from a conference website.

Project Overview

- Introduction: This project entailed developing a web scraper to systematically collect contact information of exhibitors from a conference website. The challenge was to navigate through the website's dynamic content and structure to access and extract relevant data efficiently.

- Problem Statement: Many conference websites feature detailed exhibitor lists, but collecting this information in a structured format for analysis or outreach can be time-consuming. The goal was to automate this process while ensuring accuracy and efficiency in data collection. The search criteria also had to be specified dynamically.

- Solution: I designed and implemented a web scraper using Selenium and BeautifulSoup in Python. Selenium automated the browser interactions needed to navigate the website: handling cookies, setting the search criteria, and dealing with dynamically loaded content. BeautifulSoup parsed the HTML and extracted the required information, such as company names, contact details, and booth numbers.

- Technologies Used:

  - Selenium: For automating web browser interaction, enabling the script to imitate human browsing behavior to reach the required data.

  - BeautifulSoup: For parsing HTML and extracting data; its parsing capabilities simplified sifting through complex page structures.
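The division of labor described above can be sketched in a few lines. Selenium drives a real browser, so `driver.page_source` holds the HTML after JavaScript has run, and that string is handed to BeautifulSoup. This is a minimal sketch assuming an already-open WebDriver; the helper name `soup_from_driver` is hypothetical, and the built-in `"html.parser"` is used so no extra parser (such as lxml) is required.

```python
from bs4 import BeautifulSoup


def soup_from_driver(driver, parser="html.parser"):
    """Parse the DOM that Selenium has rendered (including content loaded
    by JavaScript) with BeautifulSoup.

    `driver` is assumed to be an open Selenium WebDriver that has already
    navigated to the page of interest.
    """
    return BeautifulSoup(driver.page_source, parser)
```

In a script this would follow something like `driver = webdriver.Chrome()` and `driver.get(url)`. The BeautifulSoup calls then run on a static snapshot of the page, which is fast and does not touch the browser again.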

Key Features

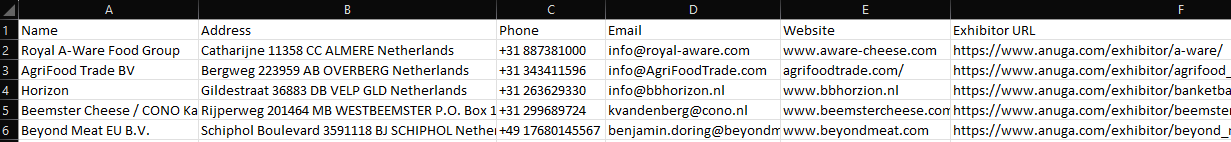

- The main fields to be scraped are:

  - Exhibitor company name

  - Address

  - Phone number

  - Website

  - Exhibitor conference URL

- The custom search criteria were “Target and outlet markets” set to “USA” and “Country/Region of origin” set to “Netherlands”.

- The results must be written to a properly formatted and cleaned CSV file.
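Because the search criteria had to be specified dynamically, one option is to read them at run time instead of hard-coding “USA” and “Netherlands”. The sketch below uses the standard library's `argparse`; the flag names (`--target-market`, `--origin`, `--output`), the function name, and the default file name are hypothetical, not taken from the original script.

```python
import argparse


def parse_search_options(argv=None):
    """Read the search filters and output path from the command line.

    Flag names are hypothetical; the defaults reproduce the criteria
    used in this project. Returns (criteria_dict, output_path).
    """
    parser = argparse.ArgumentParser(description="Scrape conference exhibitors")
    parser.add_argument("--target-market", default="USA",
                        help='value for the "Target and outlet markets" filter')
    parser.add_argument("--origin", default="Netherlands",
                        help='value for the "Country/Region of origin" filter')
    parser.add_argument("--output", default="exhibitors.csv",
                        help="CSV file to write")
    args = parser.parse_args(argv)  # argv=None means "use sys.argv"
    criteria = {
        "Target and outlet markets": args.target_market,
        "Country/Region of origin": args.origin,
    }
    return criteria, args.output
```

Running `python scraper.py --origin Belgium` (with `scraper.py` as a placeholder name) would keep the USA market filter but change the country of origin. The values still have to match the option labels the site actually offers.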

Project Details

As listed under Key Features, the data to be scraped comes from conference exhibitors that match the custom search criteria of “Target and outlet markets” set to “USA” and “Country/Region of origin” set to “Netherlands”. Selenium was used to dismiss a pop-up, open the advanced search options, and select the desired criteria. As you can see from the image, our Python script reduced the search results from 4,355 to 100.

Each exhibitor had its own page containing the required data, so we built a list of all exhibitor page URLs. Here are the functions used to grab the URLs from the search results and to handle pagination:

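```python
import time

from selenium.common.exceptions import NoSuchElementException, TimeoutException
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

# Assumes `driver` is an open WebDriver on the filtered search results

# Function to grab all exhibitor URLs on the current results page
url_list = []

def grab_url():
    all_urls = driver.find_elements(By.CLASS_NAME, 'initial_noline')
    for u in all_urls:
        url_list.append(u.get_attribute('href'))

# Function to go to the next page; clears keep_running on the last page
def next_page():
    global keep_running
    try:
        # Wait up to 5 s for the carousel's "next" arrow to appear
        WebDriverWait(driver, 5).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, 'a.slick-next'))
        )
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")
        driver.find_element(By.CSS_SELECTOR, 'a.slick-next').click()
        time.sleep(3)  # give the next page of results time to load
    except (TimeoutException, NoSuchElementException):
        # No "next" arrow found: this was the last page
        driver.quit()
        keep_running = False
```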

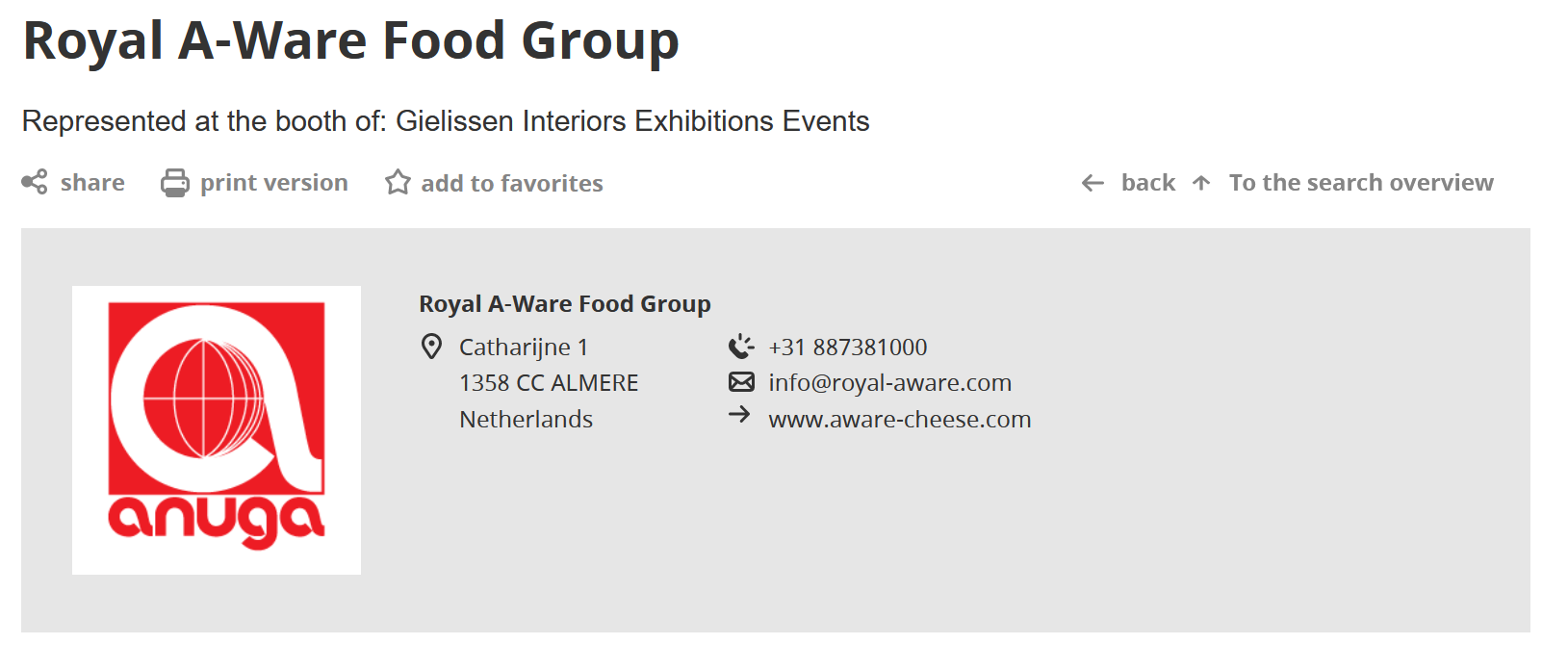

Once we had the list of individual URLs, we needed to extract the required fields from each exhibitor page. Here is an example of one exhibitor contact page:

We used the code below to check whether each exhibitor page exists (some listings were broken links on the site) and, if it does, to pull its data into a DataFrame:

```python
# Check if exhibitor page exists and add data to DataFrame.
# Assumes `soup`, `url`, `row`, `df`, and `driver` are set by the surrounding loop.
headline = soup.find(class_='hl_2')
page_missing = (headline is not None
                and headline.get_text().replace('\n', '') == 'Exhibitor and product search')

if not page_missing:
    name = soup.find(class_='headline-title').get_text().replace('\n', '')
    # Collapse runs of spaces and newlines into single spaces
    address = ' '.join(soup.find(class_='text-left').get_text().split())
    try:
        phone = soup.find(class_='sico ico_phone').get_text()
    except AttributeError:  # find() returned None: no phone listed
        phone = None
    try:
        email = soup.find(class_='xsecondarylink').get_text()
    except AttributeError:
        email = None
    try:
        website = soup.find(class_='sico ico_link linkellipsis').get_text().strip()
    except AttributeError:
        website = None
    exhibitor_url = url

    # Add all data to DataFrame row
    df.loc[row] = [name, address, phone, email, website, exhibitor_url]

driver.quit()
```
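The same extraction can be packaged as a pure function of the page HTML, which makes it testable without a browser. This sketch keeps the class names from the code above, treats any missing element as `None`, and normalizes whitespace in every field; the function and helper names are hypothetical. Note that passing a multi-class string such as `"sico ico_phone"` to `class_` matches the exact `class` attribute value, so the order of the classes on the page matters.

```python
from bs4 import BeautifulSoup

BROKEN_PAGE_TITLE = "Exhibitor and product search"


def clean(text):
    """Collapse runs of whitespace (including newlines) into single spaces."""
    return " ".join(text.split())


def text_of(soup, css_class):
    """Cleaned text of the first element with this class, or None if absent."""
    tag = soup.find(class_=css_class)
    return clean(tag.get_text()) if tag is not None else None


def parse_exhibitor(html, url):
    """Return one exhibitor record as a dict, or None for a broken listing."""
    soup = BeautifulSoup(html, "html.parser")
    if text_of(soup, "hl_2") == BROKEN_PAGE_TITLE:
        return None  # the site fell back to the generic search page
    return {
        "name": text_of(soup, "headline-title"),
        "address": text_of(soup, "text-left"),
        "phone": text_of(soup, "sico ico_phone"),
        "email": text_of(soup, "xsecondarylink"),
        "website": text_of(soup, "sico ico_link linkellipsis"),
        "exhibitor_url": url,
    }
```

Returning `None` for broken listings lets the calling loop simply skip them, and because the function never touches `driver`, one browser session can be reused across all exhibitor pages.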

Once the script is run, we can see the final output in a CSV file:
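The write-up does not show the final export step. With pandas, the DataFrame built above can be saved with `df.to_csv("exhibitors.csv", index=False)`. The sketch below does the same with the standard library's `csv` module for records stored as a list of dicts; the column names are hypothetical, chosen to mirror the variables in the code above, and missing values are written as empty cells.

```python
import csv

# Hypothetical column names mirroring the variables in the scraping code
COLUMNS = ["name", "address", "phone", "email", "website", "exhibitor_url"]


def write_exhibitors_csv(records, fh):
    """Write exhibitor dicts to an open text file as CSV.

    Keys not in COLUMNS are ignored; None or missing values become ''.
    """
    writer = csv.DictWriter(fh, fieldnames=COLUMNS)
    writer.writeheader()
    for rec in records:
        writer.writerow({col: (rec.get(col) or "") for col in COLUMNS})
```

The file should be opened with `newline=""`, as the `csv` documentation recommends, for example `with open("exhibitors.csv", "w", newline="", encoding="utf-8") as fh: write_exhibitors_csv(records, fh)`.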